数据预处理

转化为单词词组

去除停用词

去除数字

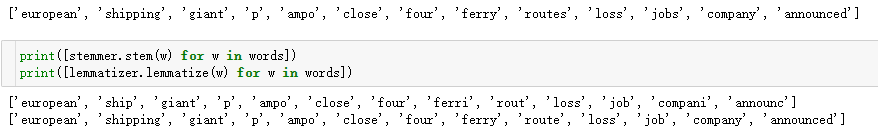

两种处理方法:词干提取和词形还原

结果比较:

可以从结果上看出,词干提取的方法直接将单词变成了原型。而词形还原更多的保留了语法的正确。

用词袋模型将训练集文本转化为向量(id 从0开始的字典)

使用贝叶斯模型进行分类

这里一共有两种模型:

7.1 计算先验概率:

P ( c ) = N ( c , text ) N ( text ) P(c)=\frac{N(c, \text { text })}{N(\text { text })}

P ( c ) = N ( text ) N ( c , text )

7.2 计算似然概率 P ( w i ∣ c ) P(w_i|c) P ( w i ∣ c )

P ( d i ∣ c ) = N ( C d i ) + 1 N ( C ) + 2 P\left(d_{i} \mid c\right)=\frac{N\left(C_{d_{i}}\right)+1}{N(C)+2}

P ( d i ∣ c ) = N ( C ) + 2 N ( C d i ) + 1

N ( C d ? ) N\left(C_{d_{?}}\right) N ( C d ? ) N ( C ) N(C) N ( C )

P ( w i ∣ c ) = N ( w i i n W c ) + 1 N ( w c ) + m P\left(w_{i} \mid c\right)=\frac{N\left(w_{i} i n W_{c}\right)+1}{N\left(w_{c}\right)+m}

P ( w i ∣ c ) = N ( w c ) + m N ( w i i n W c ) + 1

N ( w i i n W c ) N\left(w_{i} i n W_{c}\right) N ( w i i n W c ) N ( w c ) N\left(w_{c}\right) N ( w c ) m m m

7.3 计算联合概率

P ( c ∩ w i ) = P ( w i ∣ c ) P ( c ) P(c\cap w_i)=P(w_i|c)P(c)

P ( c ∩ w i ) = P ( w i ∣ c ) P ( c )

P ( text ∣ c ) = ∏ i = 1 m P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b , d i ∈ D , b = { 1 if d i ∈ text 0 else \begin{aligned}

P(\text {text} \mid c) & =\prod_{i=1}^{m} P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b}, \\

d_{i} & \in D, \\

b=&\left\{\begin{array}{cc}

1 & \text { if } d_{i} \in \text { text } \\

0 & \text { else }

\end{array}\right.

\end{aligned}

P ( text ∣ c ) d i b = = i = 1 ∏ m P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b , ∈ D , { 1 0 if d i ∈ text else

P ( text ∣ c ) = ∏ i = 1 n P ( w i ∣ c ) , w i ∈ D P(\text {text} \mid c)=\prod_{i=1}^{n} P\left(w_{i} \mid c\right), w_{i} \in D

P ( text ∣ c ) = i = 1 ∏ n P ( w i ∣ c ) , w i ∈ D

7.4 朴素贝叶斯优化问题:

argmax c ∈ C ∏ i = 1 n P ( w i ∣ c ) P ( c ) \operatorname{argmax}_{c \in C} \prod_{i=1}^{n} P\left(w_{i} \mid c\right) P(c)

a r g m a x c ∈ C i = 1 ∏ n P ( w i ∣ c ) P ( c )

c text = argmax c ∈ C [ ln P ( c ) + ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b ] c_{\text {text }}=\operatorname{argmax}_{c \in C}\left[\ln P(c)+\sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b}\right]

c text = a r g m a x c ∈ C [ ln P ( c ) + i = 1 ∑ m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b ]

c t e x t = argmax c ∈ C [ ln P ( c ) + ∑ i = 1 n ln P ( w i ∣ c ) ] c_{t e x t}=\operatorname{argmax}_{c \in C}\left[\ln P(c)+\sum_{i=1}^{n} \ln P\left(w_{i} \mid c\right)\right]

c t e x t = a r g m a x c ∈ C [ ln P ( c ) + i = 1 ∑ n ln P ( w i ∣ c ) ]

测试计算准确度

计算似然概率 P ( d i ∣ c ) = N ( C d i ) + 1 N ( C ) + 2 P\left(d_{i} \mid c\right)=\frac{N\left(C_{d_{i}}\right)+1}{N(C)+2} P ( d i ∣ c ) = N ( C ) + 2 N ( C d i ) + 1

1 p_stat = (sents_count + 1 ) / (category_sents + 2 )

测试

计算 ln P ( c ) \ln P(c) ln P ( c )

计算 ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b \sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b} ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b

思路:

统计一个文档中出现了哪些词,得到矩阵 B \mathbf{B} B

字典中出现过的词标注为1,未出现过标注为0。

如果该词出现过,b=1,∑ i ∈ t e x t ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i ∈ t e x t ln P ( d i ∣ c ) \sum_{i \in text} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b}=\sum_{i \in text} \ln P\left(d_{i} \mid c\right) ∑ i ∈ t e x t ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i ∈ t e x t ln P ( d i ∣ c )

如果该词没有出现,b=0,∑ i ∉ t e x t ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i ∉ t e x t ln ( 1 − P ( d i ∣ c ) ) \sum_{i \notin text} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b}= \sum_{i \notin text} \ln \left(1-P\left(d_{i} \mid c\right)\right) ∑ i ∈ / t e x t ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i ∈ / t e x t ln ( 1 − P ( d i ∣ c ) )

使用矩阵运算:

先计算 ∑ i = 1 m ln P ( d i ∣ c ) b \sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b} ∑ i = 1 m ln P ( d i ∣ c ) b ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b \sum_{i=1}^{m}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b} ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b

∑ i ∈ t e x t ln P ( d i ∣ c ) = ∑ i = 1 m ln P ( d i ∣ c ) b ∗ B \sum_{i \in text} \ln P\left(d_{i} \mid c\right) = \sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b} * \mathbf{B} ∑ i ∈ t e x t ln P ( d i ∣ c ) = ∑ i = 1 m ln P ( d i ∣ c ) b ∗ B

∑ i ∉ t e x t ln ( 1 − P ( d i ∣ c ) ) = ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b ∗ ( 1 − B ) \sum_{i \notin text} \ln \left(1-P\left(d_{i} \mid c\right)\right)= \sum_{i=1}^{m}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b} * (1-\mathbf{B}) ∑ i ∈ / t e x t ln ( 1 − P ( d i ∣ c ) ) = ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b ∗ ( 1 − B )

∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i = 1 m ln P ( d i ∣ c ) b ∗ B + ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b ∗ ( 1 − B ) \sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b} = \sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b} * \mathbf{B} + \sum_{i=1}^{m}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b} * (1-\mathbf{B}) ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b = ∑ i = 1 m ln P ( d i ∣ c ) b ∗ B + ∑ i = 1 m ( 1 − P ( d i ∣ c ) ) 1 − b ∗ ( 1 − B )

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 for i, words in enumerate (data_x): b = np.zeros(len (dictionary)) for word in set (words): if word in dictionary: word_id = dictionary[word] b[word_id] = 1 b = np.expand_dims(b, 1 ).repeat(len (categories), 1 ) _b = np.ones(b.shape) - b p = np.log(p_stat) * b + np.log(1 - p_stat) * _b p = np.sum (p, axis=0 ) p += p_c

计算 c text = argmax c ∈ C [ ln P ( c ) + ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b ] c_{\text {text }}=\operatorname{argmax}_{c \in C}\left[\ln P(c)+\sum_{i=1}^{m} \ln P\left(d_{i} \mid c\right)^{b}\left(1-P\left(d_{i} \mid c\right)\right)^{1-b}\right] c text = a r g m a x c ∈ C [ ln P ( c ) + ∑ i = 1 m ln P ( d i ∣ c ) b ( 1 − P ( d i ∣ c ) ) 1 − b ]

1 2 if np.argmax(p) == categories[data_y[i]]: count += 1

计算似然概率 P ( w i ∣ c ) = N ( w i i n W c ) + 1 N ( w c ) + m P\left(w_{i} \mid c\right)=\frac{N\left(w_{i} i n W_{c}\right)+1}{N\left(w_{c}\right)+m} P ( w i ∣ c ) = N ( w c ) + m N ( w i i n W c ) + 1

1 p_stat = (words_frequency + 1 ) / (category_words + len (dictionary))

测试

计算 ln P ( c ) \ln P(c) ln P ( c )

计算 ∑ i = 1 n ln P ( w i ∣ c ) \sum_{i=1}^{n} \ln P\left(w_{i} \mid c\right) ∑ i = 1 n ln P ( w i ∣ c )

1 2 3 4 5 6 7 8 9 for i, words in enumerate (data_x): p = np.zeros(len (categories)) for word in words: if word in dictionary: word_id = dictionary[word] p += np.log(p_stat[word_id,:]) p += p_c

测试 c t e x t = argmax c ∈ C [ ln P ( c ) + ∑ i = 1 n ln P ( w i ∣ c ) ] c_{t e x t}=\operatorname{argmax}_{c \in C}\left[\ln P(c)+\sum_{i=1}^{n} \ln P\left(w_{i} \mid c\right)\right] c t e x t = a r g m a x c ∈ C [ ln P ( c ) + ∑ i = 1 n ln P ( w i ∣ c ) ]

1 2 if np.argmax(p) == categories[data_y[i]]: count += 1

TF

Bernoulli

stemmer

9479/10208 92.86% 9484/10208 92.91%

lemmatizer

9550/10208 93.55%% 9551/10208 93.56

结果与实验手册中保持一致

缺失项

stemmer+TF

Bernoulli

小写

Accuracy: 9480/10208 92.87%

Accuracy: 9480/10208 92.87%

标点

Accuracy: 9463/10208 92.7%

Accuracy: 9470/10208 92.77%

停用词

Accuracy: 9451/10208 92.58%

Accuracy: 9449/10208 92.56%

数字

Accuracy: 9500/10208 93.06%

Accuracy: 9504/10208 93.1%

词干提取

Accuracy: 9587/10208 93.92%

Accuracy: 9595/10208 93.99%

全部缺失

Accuracy: 9569/10208 93.74%

Accuracy: 9574/10208 93.79%

**结论:**缺失部分预处理操作对结果的影响不是特别大

源码:https://github.com/laptype/Machine-Learning-Code/tree/main/Task 3_Bayes